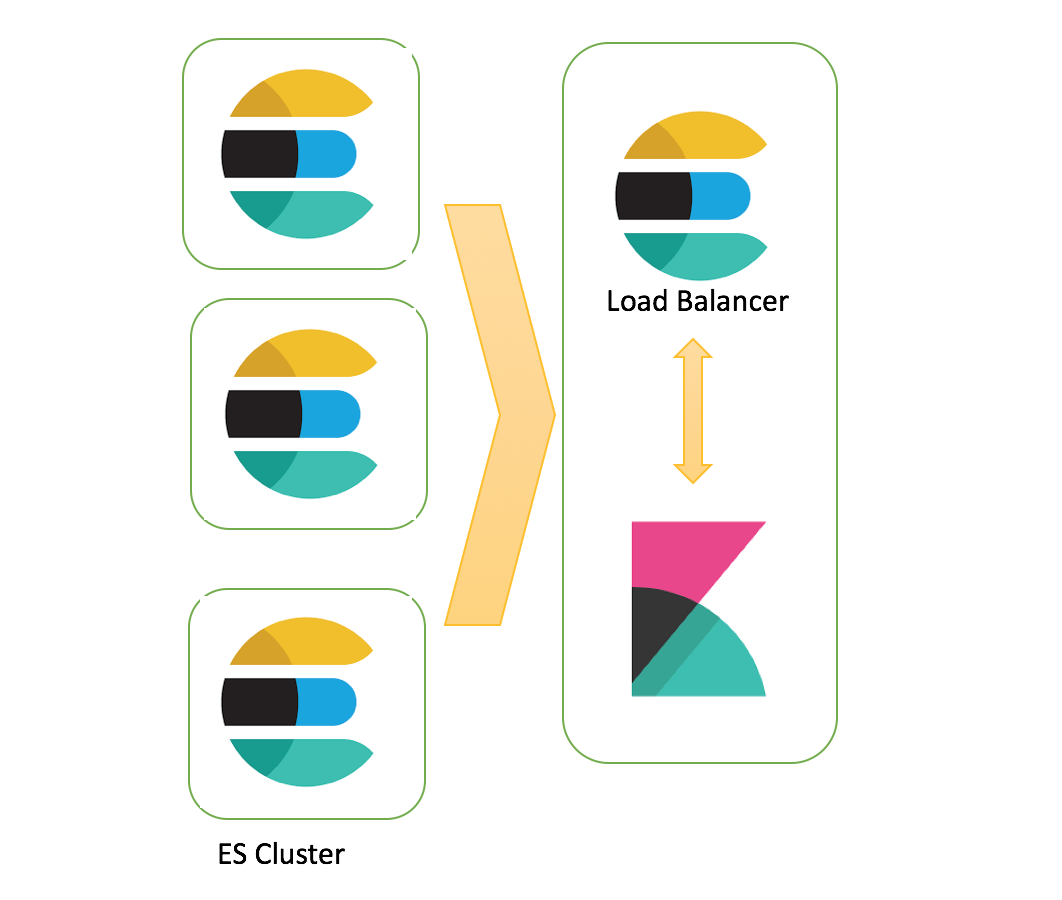

Kibana & Elasticsearch: Load balancing across multiple ES nodes

If you've been using the Elastic Stack, you've probably tried to use Kibana with multiple Elasticsearch instances and got the frustrating answer: no, Kibana supports only one Elasticsearch instance for all its queries. Many issues have been opened on GitHub, and many questions asked on the Elastic forum, on this subject. Today I want to share a pragmatic way to solve this problem.

Load balancing across multiple ES nodes

I've tried several alternatives, such as setting up a DNS alias or using HAProxy as a load balancer, but the experience was not seamless.

What we can do instead is follow the official Kibana documentation and run an Elasticsearch coordinating-only node (or client node) on the same machine as Kibana. In my experience, this is the easiest and most effective way to set up a load balancer for the ES nodes that Kibana uses.

Elasticsearch coordinating-only nodes are essentially smart load balancers that are part of the cluster. They process incoming HTTP requests, redirect operations to the other nodes in the cluster as needed, and gather and return the results.

Set up

- Install an Elasticsearch client node on the same machine as Kibana.

- Configure the node as a coordinating-only node by setting `node.data`, `node.master` and `node.ingest` to `false` in `elasticsearch.yml`.

node.master: false

node.data: false

node.ingest: false

- Add the client node to the Elasticsearch cluster by giving it the same `cluster.name` as the other nodes.

cluster.name: "my_cluster"

- Update the transport and HTTP host settings in `elasticsearch.yml`. `transport.host` needs to be on a network reachable by the cluster members, and `network.host` is the address for the HTTP connection from Kibana (localhost:9200 by default).

network.host: localhost

http.port: 9200

transport.host: YOUR_IP

transport.tcp.port: 9300

- In `kibana.yml`, set `elasticsearch.url` to `http://localhost:9200`, so that Kibana points to the client node.

elasticsearch.url: "http://localhost:9200"
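
Once the client node is running, it can help to confirm that it really joined the cluster as a coordinating-only node. Below is a minimal Python sketch of one way to check, not part of the original setup. It assumes the node answers on `http://localhost:9200` without authentication, and that the nodes info API (`GET /_nodes/_local`) returns a `roles` list for each node. The function names `is_coordinating_only` and `fetch_local_node_info` are hypothetical helpers made up for this example.

```python
import json
from urllib.request import urlopen

# Roles a coordinating-only node should not hold (assumption: role names
# as reported in the "roles" list of the nodes info API).
EXCLUDED_ROLES = {"master", "ingest"}


def is_coordinating_only(nodes_info: dict) -> bool:
    """Return True if no node in the response holds a master, data, or ingest role."""
    nodes = nodes_info.get("nodes", {})
    if not nodes:
        # No node described in the response: nothing to confirm.
        return False
    for node in nodes.values():
        for role in node.get("roles", []):
            # Treat any role starting with "data" as a data role.
            if role in EXCLUDED_ROLES or role.startswith("data"):
                return False
    return True


def fetch_local_node_info(base_url: str = "http://localhost:9200") -> dict:
    """Fetch info about the node that answers the request (GET /_nodes/_local)."""
    with urlopen(f"{base_url}/_nodes/_local", timeout=5) as response:
        return json.load(response)
```

Running `is_coordinating_only(fetch_local_node_info())` on the Kibana machine should return `True` if the three `node.*` settings above were picked up. If it returns `False`, the node is still holding a master, data, or ingest role and `elasticsearch.yml` is worth rechecking.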